To summarize, using data/value objects and method invocations extensively (such as constructors, getters, setters etc.) does come at significant performance cost. Total improvement was a result of many small improvements and analysis how each step affected the performance.

Jprofiler cost code#

This result was achieved despite the fact that the initial code did seem as optimal implementation on the first glance. Thus the inner loop code was rewritten: SolutionLineItem solutionLineItem = priceQuantityArray Ĭollectively, all optimizations reduced the test case runtime from 60 seconds to 13 seconds (for single threaded implementation) or 5x improvement, and 2.9 seconds for multithreaded implementation or 20x improvement. So the solution was to construct in advance all SolutionLineItem objects that we might need, since we had limited numbers of quantity/price combinations. And in most cases the new object was thrown away at the end of the permutation processing. Changing this to empty constructor and using setters was even worse. This is a simple data class whose constructor was created by lombok annotation. This helped, reducing running time to just 22īut profiler has revealed another, surprising code feature: almost third of the remaining time was spent calling a simple constructor: SolutionLineItem solutionLineItem = new SolutionLineItem(quantity, price) Again, I rewrote the code with assigning local variables that could be reused. Getting back to profiler, I noticed significant time was again spent in calling various getter methods.

Reducing the test case runtime to 28 seconds (for a single thread).

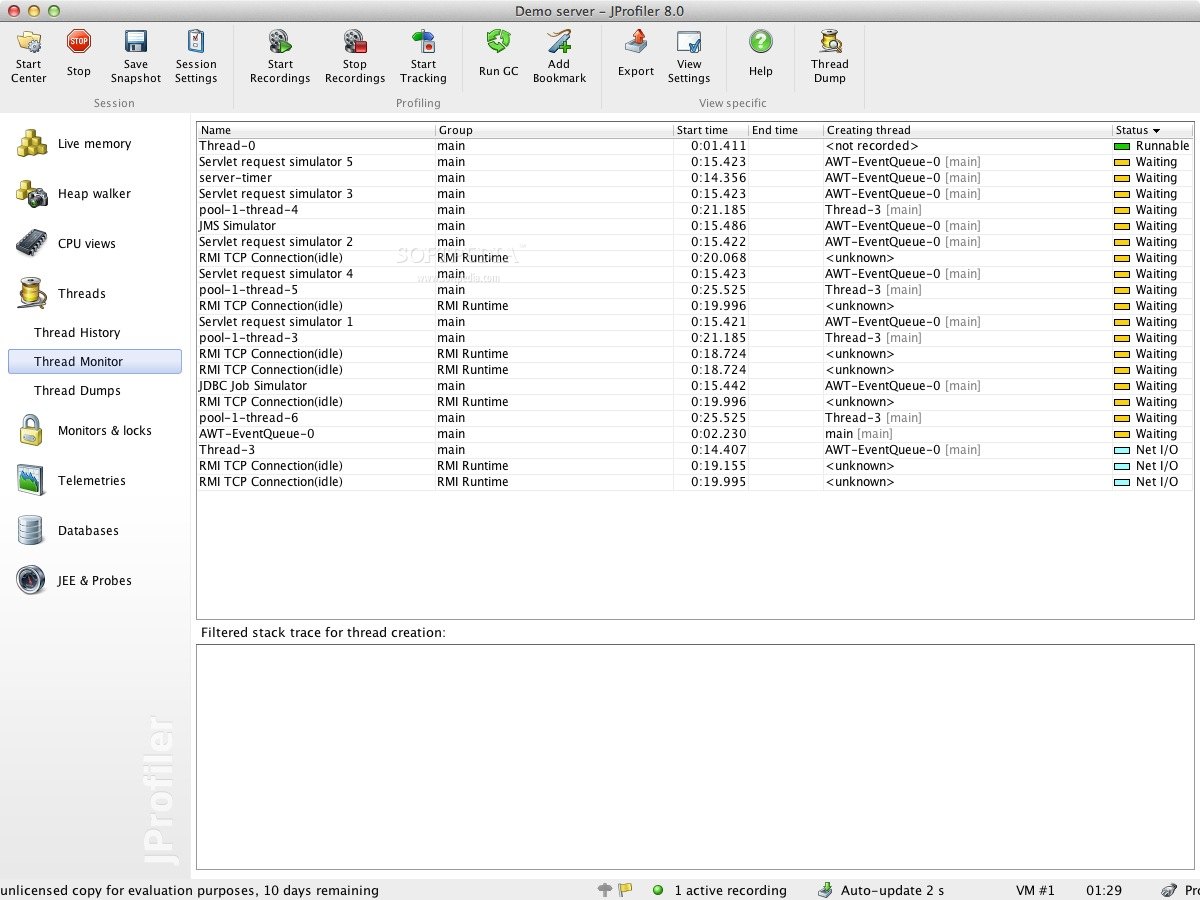

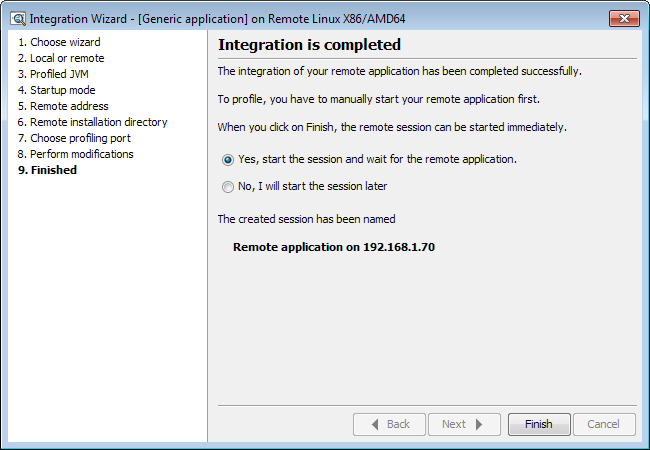

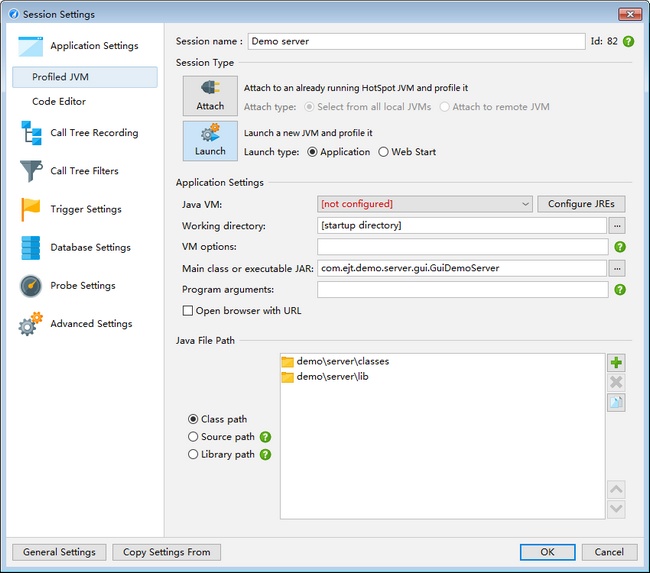

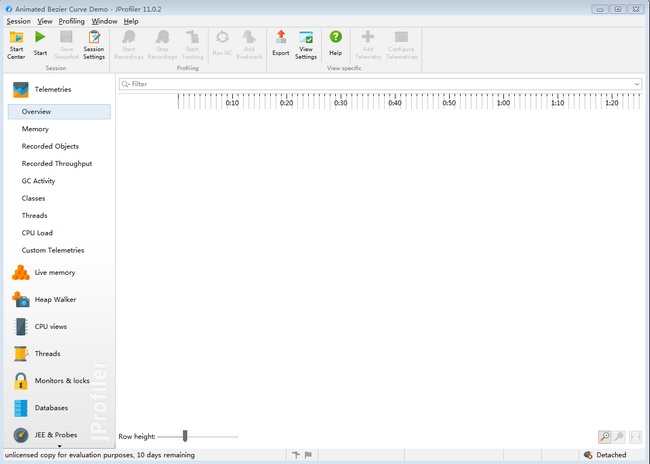

These two changes more than doubled the performance, (Hash)Set was also gone and replaced by direct, iterative search over the array. But as the search space was rather short (up to 32 objects), code was rewritten using plain old java arrays and using integer based indexing to access the arrays. HashSets were used to lookup objects and ArrayLists to add and iterate over objects. So this single change brought 20% optimization.Īlmost 50% of the runtime was spent in various Collections API methods. So logging can have significant performance impact even with disabled appenders, especially in tight loops. The easy improvement was related to logging statement: log.debug("permutation So after switching to instrumentation, (which really slowed down the test case execution time by 5x factor), problematic statements were quickly identified. I refactored code to have most of the inner loop code split into individual method calls, so that we could better count the number of invocations and time spent at each method.įirst the recommended CPU sampling method was used during profiling, but that did not really provide enough insight of the innermost running loop. To get a better insight into the running code, we looked at the profilers, and selected a JProfiler (available at ).

Although my processor supports hyperthreading, in this case the performance seemed to be proportional to the number of cores, and not number of supported hyper-threads.

That indeed helped, reducing the runtime almost fivefold to 13 seconds using ten running threads on six core processor. This change required additional synchronized code portions, since it needed to maintain a sorted list of best solutions found during the solution search. Thus it was not able to use multiple cores available in the machine.Ĭode was refactored to use method annotation and CompletableFuture invocations to be able to split execution into multiple, independent batches. The cause was obvious – the implementation was running in a single thread. Our test case included 2^25 (33 million) iterations for which the initial algorithmic solution took 60 seconds of runtime on the development machine.įirst thing that was noticed running a test case that the CPU was not utilized 100%, but a single core was. While we managed to implement a suitable algorithm that was on the edge of acceptability, we wanted to use this opportunity to push a little bit further and implement low level optimizations. Recently we had a unique challenge of implementing a custom calculation/solution search algorithm for which we did not find any suitable algorithm or library.

0 kommentar(er)

0 kommentar(er)